We’ve all read “The Lean Startup.” We get the theory. But when it’s time to ship, somehow we mess it up anyway.

The number one mistake? Treating the MVP like a mini version of the final product — smaller, scrappier, but still held to the same success metrics as a full launch.

So we ship it (maybe call it a “beta”) and hope for activation, engagement, and retention. If the numbers are good, we high-five. If they’re bad, we panic.

Either way, we often forget to ask why.

And if you can’t answer why, your MVP has failed. Because learning, not launching, is the entire reason why your MVP exists.

Let’s rewind.

Can your MVP validate your riskiest assumptions?

Your MVP exists to test your riskiest assumptions, not to become a feature-lite version of your dream product.

I advise every team I work with to use David Bland’s assumptions mapping framework. It forces you to get honest about what really needs to be true for your idea to work.

Assumptions mapping

With assumptions mapping, session participants generate assumptions alone rather than in a group. This avoids groupthink and captures as many different perspectives as possible.

Each person writes down as many assumptions as possible for the proposed solution.

For example, the prompt might be: “What needs to be true for this idea to succeed?”

Then you bucket those assumptions into five categories:

- Desirability – Do users want this?

- Feasibility – Can we build this?

- Viability – Can we profit from this?

- Usability – Will people understand how to use it?

- Ethics – Would your mom cringe if she found out how we did this?

Tip: Use color-coding to keep things balanced. I’ve seen technical teams generate 90 percent of their assumptions in the “feasibility” category, casually ignoring whether it’s a problem worth solving in the first place.

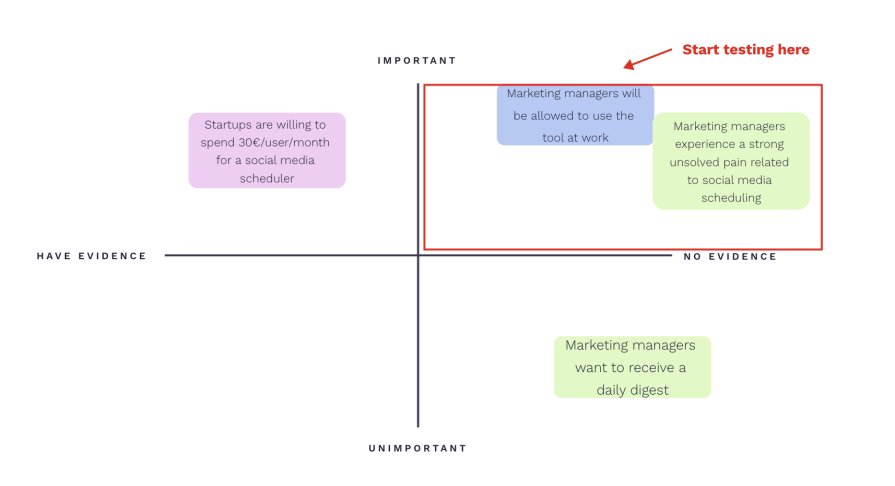

Once you’ve got your list, map the assumptions:

- X-axis: from no evidence (left) to have evidence (right)

- Y-axis: from unimportant (bottom) to important (top)

Your riskiest assumptions live in the top-left quadrant: important, with no evidence. That’s where your test strategy should focus first.
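To make the prioritization concrete, here is a minimal Python sketch of placing assumptions on the map and pulling out the top-left quadrant. It assumes each assumption was scored from 0 to 1 on importance and on evidence during the session; the `Assumption` class, the 0 to 1 scale, the 0.5 cut-off, and the sample assumptions for a hypothetical social media scheduling tool are all illustrative choices, not part of Bland’s framework.

```python
from dataclasses import dataclass


@dataclass
class Assumption:
    text: str
    category: str      # desirability, feasibility, viability, usability, or ethics
    importance: float  # hypothetical score: 0 = unimportant, 1 = important (Y-axis)
    evidence: float    # hypothetical score: 0 = no evidence, 1 = have evidence (X-axis)


def quadrant(a: Assumption, cutoff: float = 0.5) -> str:
    """Place an assumption on the 2x2 map using an assumed cut-off."""
    vertical = "top" if a.importance >= cutoff else "bottom"
    horizontal = "left" if a.evidence < cutoff else "right"
    return f"{vertical}-{horizontal}"


def riskiest(assumptions: list[Assumption], cutoff: float = 0.5) -> list[Assumption]:
    """Return top-left assumptions (important, little evidence),
    most important first, then least evidenced first."""
    top_left = [a for a in assumptions if quadrant(a, cutoff) == "top-left"]
    return sorted(top_left, key=lambda a: (-a.importance, a.evidence))


assumptions = [
    Assumption("Managers are allowed to use the tool at work", "viability", 0.9, 0.1),
    Assumption("We can integrate with LinkedIn", "feasibility", 0.8, 0.7),
    Assumption("Users feel strong scheduling pain", "desirability", 0.95, 0.2),
    Assumption("Users like our color scheme", "usability", 0.2, 0.1),
]

for a in riskiest(assumptions):
    print(a.text)
```

Because the map is a living document, the idea is to raise an assumption’s `evidence` score as tests come back and rerun the sort; once it crosses the cut-off, it drops out of the top-left list.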

When generating assumptions, teams most often make these mistakes:

- Starting the exercise without aligning on what the assumptions should be about. For example, are you generating assumptions about your ideal customer profile (ICP), a job to be done, solution idea one, or solution idea two?

- Not generating enough assumptions. Keep going until it hurts. Write down everything that pops into your head; you’ll narrow the list to the most important ones later.

- Treating assumptions mapping as a one-off exercise. The assumptions map is a living document and the cornerstone of your test strategy. Update it as you learn and move unknowns into the “have evidence” zone.

- Generating assumptions in a group setting. Groupthink kills risk-spotting. Always start solo, then compare and cluster.

- Failing to generate assumptions across all five categories (desirability, feasibility, viability, usability, ethics).

Identifying the right test methods to validate assumptions

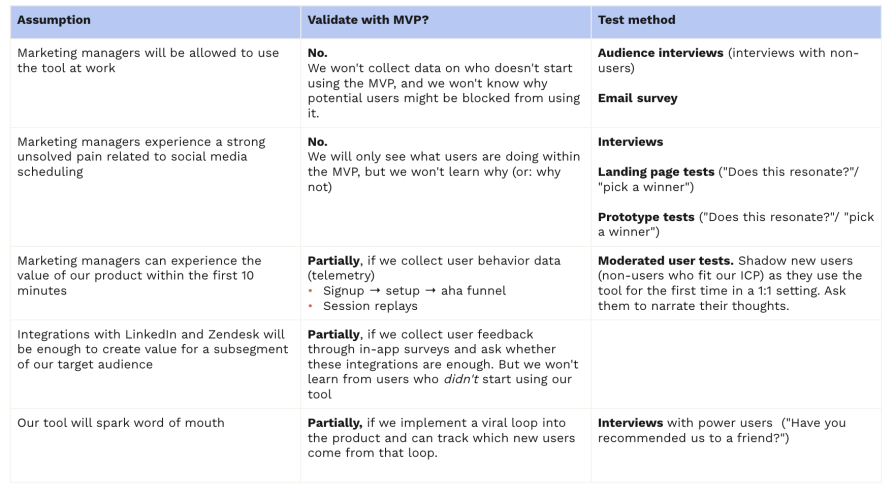

Let’s assume you’ve identified the following assumptions as the riskiest ones:

- Marketing managers will be allowed to use the tool at work

- Marketing managers experience a strong unsolved pain related to social media scheduling

- Marketing managers can experience the value of our product within the first 10 minutes

- Integrations with LinkedIn and Zendesk will be enough to create value for a subsegment of our target audience

- Our tool will spark word of mouth

Is an MVP the best way to validate these, or should you use another test method?

Top tips to set yourself up for maximum learning

Another costly MVP mistake? Skipping the assumptions-mapping step altogether and trying to test the idea as a whole instead. When your MVP misses its goal, you’re left staring at a number with no idea why.

Try these two tips to maximize your learning:

- Run qualitative tests before and during your MVP phase to understand:

  - The most likely reasons why prospects who fit your ICP failed to convert. Your MVP will only show you the behavior of the people who did convert.

  - The why behind the what. An MVP shows you what users do in your product, but not why they do it.

- Make sure your MVP captures key metrics, such as signup, setup, aha, and eureka events, or adoption of and engagement with core features.
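Once those events are tracked, step-by-step conversion rates show where users drop off. Below is a minimal Python sketch that assumes a hypothetical event log of `(user_id, event_name)` pairs and a funnel ordered signup, setup, aha, eureka; the event names and sample data are illustrative and not tied to any particular analytics tool.

```python
# Hypothetical event names, in the order users are expected to hit them.
FUNNEL = ["signup", "setup", "aha", "eureka"]


def funnel_conversion(events: list[tuple[str, str]]) -> dict[str, float]:
    """Return, for each step, the share of users who reached it out of those
    who reached the previous step. A user only counts for a step if they
    also hit every earlier step."""
    users_by_event: dict[str, set[str]] = {step: set() for step in FUNNEL}
    for user_id, event in events:
        if event in users_by_event:  # ignore events outside the funnel
            users_by_event[event].add(user_id)

    rates: dict[str, float] = {}
    reached = users_by_event[FUNNEL[0]]
    for prev, step in zip(FUNNEL, FUNNEL[1:]):
        next_reached = reached & users_by_event[step]
        rates[f"{prev} -> {step}"] = len(next_reached) / len(reached) if reached else 0.0
        reached = next_reached
    return rates


events = [
    ("u1", "signup"), ("u1", "setup"), ("u1", "aha"), ("u1", "eureka"),
    ("u2", "signup"), ("u2", "setup"), ("u2", "aha"),
    ("u3", "signup"), ("u3", "setup"),
    ("u4", "signup"),
]

for step, rate in funnel_conversion(events).items():
    print(f"{step}: {rate:.0%}")
```

Requiring users to pass through every earlier step keeps the rates strict; whether that fits depends on your product, since some users may reach an aha moment without a formal setup step. Either way, the numbers only tell you where users drop off, which is exactly why the qualitative tests above are still needed to learn why.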

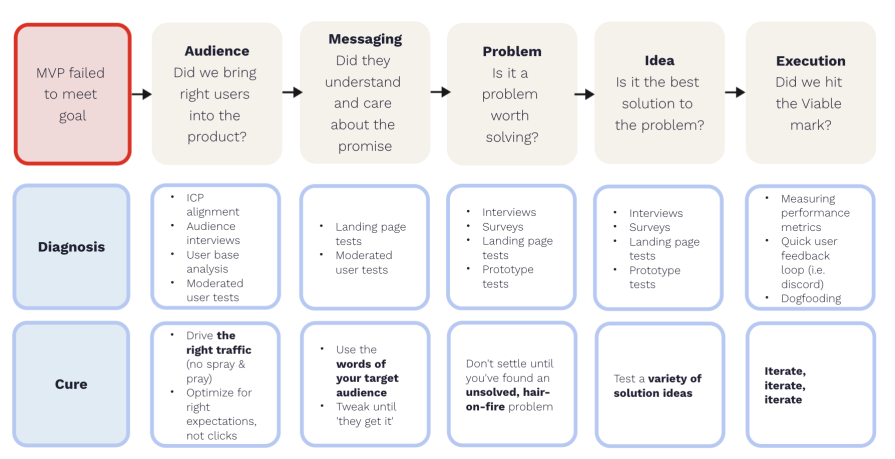

Can you isolate your point of failure? The AMPIE framework

In my 15 years of working with early-stage startups, I’ve seen MVPs fail for five reasons, which the AMPIE framework (audience, messaging, problem, idea, execution) captures:

1. Audience: You brought the wrong users into your product

In my experience, great products are built to solve the specific pain point of a narrow target audience. Trying to serve everyone at once leads to mediocrity (at best).

One of the biggest ways an MVP can fail is attracting users who aren’t the ones you built the product for. They came in with a different set of needs and were never going to be successful in your product.

If you follow a spray-and-pray user acquisition strategy, optimized for clicks rather than for truth, you’ll likely see a spike in signups but kill your retention.

This is also why I push back on strategies like “let’s give it away for free and see what people do.” If you’re watching the wrong people, the data is garbage.

What to do instead:

- Define your ICP before you build, not after. Interviews and qualitative (moderated) tests can help you figure out which niche ICP to build for.

- Run qualitative interviews to deeply understand their specific pain and context.

- Drive traffic intentionally. Get the right users to your product. Optimize for setting the right expectations, not for clicks.

- Disregard feedback from non-ICP users. It’s noise, not signal.

Defining and continuously refining your ICP is step zero. It sets the entire MVP up for signal-rich learning instead of misleading noise.
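One practical way to keep non-ICP noise out of your read on an MVP is to report every metric separately for ICP and non-ICP users. The sketch below assumes a hypothetical `User` record with an `is_icp` flag (set, for example, from an onboarding question) and a `retained_week_4` flag; the field names and sample users are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class User:
    user_id: str
    is_icp: bool           # hypothetical: does this user match your ICP?
    retained_week_4: bool  # hypothetical retention flag


def retention_by_segment(users: list[User]) -> dict[str, float]:
    """Week-4 retention computed separately for ICP and non-ICP users."""
    result: dict[str, float] = {}
    for label, flag in (("icp", True), ("non_icp", False)):
        segment = [u for u in users if u.is_icp == flag]
        retained = sum(u.retained_week_4 for u in segment)  # True counts as 1
        result[label] = retained / len(segment) if segment else 0.0
    return result


users = [
    User("a", True, True), User("b", True, True), User("c", True, False),
    User("d", False, False), User("e", False, False), User("f", False, False),
    User("g", False, True),
]

print(retention_by_segment(users))
```

In this made-up sample, blended retention is 3 of 7 users (about 43 percent), which hides that ICP users retain at two-thirds while non-ICP users retain at one quarter.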

2. Messaging: Did they understand and care about the promise?

Let’s assume that users can self-serve their way into your product via your landing page. Bad messaging can kill you in two ways:

- Low visit → signup rate. Your value prop doesn’t resonate or isn’t understood.

- Low signup → aha conversion or retention. People expected one thing and got another: your landing page made a promise your product didn’t keep.
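The two failure modes map onto two ratios, so a rough first check can be scripted. This is a minimal Python sketch under loud assumptions: the function name and both thresholds are hypothetical targets your team would set before launch, not industry benchmarks. A low signup → aha rate can also come from audience, problem, or execution issues, so treat a flag as a prompt for qualitative follow-up rather than a verdict.

```python
def messaging_signals(
    visits: int,
    signups: int,
    aha_users: int,
    min_visit_to_signup: float,  # hypothetical target set by your team
    min_signup_to_aha: float,    # hypothetical target set by your team
) -> list[str]:
    """Flag which messaging failure mode(s) the funnel numbers point to."""
    signals: list[str] = []
    visit_to_signup = signups / visits if visits else 0.0
    signup_to_aha = aha_users / signups if signups else 0.0
    if visit_to_signup < min_visit_to_signup:
        signals.append("value prop not resonating or not understood")
    if signup_to_aha < min_signup_to_aha:
        signals.append("landing page promise not kept by the product")
    return signals


# 80 of 1,000 visitors signed up (8%), but only 12 of 80 reached aha (15%).
print(messaging_signals(1000, 80, 12, min_visit_to_signup=0.05, min_signup_to_aha=0.3))
```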

You can test this with landing page tests (borrowed from Matt Lerner’s company Systm):

- Mock up your landing page within 15 minutes. Replit, Lovable, and v0 have made this 10x faster.

- Run 1:1 moderated tests with 10 to 15 people:

  - Headline clarity test

    - Show the page for five seconds.

    - Hide it. Ask: “What was that? What do they help you with?”

    - Repeat, this time showing the page for ten seconds. Did understanding improve?

  - Narrated walkthrough

    - Send them the link and let them scroll freely.

    - Ask them to think out loud. Listen for confusion, boredom, or “meh” moments.

  - Compare and contrast

    - Show two to five different landing pages, with either:

      - Different value props for the same pain, or

      - Different problems you could solve

    - Ask them to pick a winner.

This test gives you brutal clarity on two questions:

- Do people understand your solution?

- Do people want your solution?

3. Problem: Is it a problem worth solving?

Assuming you have the right users and the landing page worked, maybe the problem isn’t a hair-on-fire one, or maybe users are fine with their existing alternatives. More audience or user interviews, fake door marketing tests, and expert conversations can sniff this out.

4. Idea: Is it the best solution idea?

Maybe the problem is real, but your take on solving it isn’t resonating.

I’m a big fan of user labs for gauging excitement about a prototype:

- Invite about 10 to 15 testers to 1:1 sessions in which they try a prototype for the first time.

- You can ask testers to complete tasks, or simply to do what they would usually do when trying a product for the first time.

- Instead of betting the house on one idea, present your user lab testers with three to five prototypes for the same problem and have them pick a winner. Tools like Replit, Lovable, and v0 make it easy to rapidly spin up prototypes for multiple solutions, especially if you specify that you only need a clickable frontend.

5. Execution: Did you hit the right quality level?

Did you hit the “viable” in minimum viable product?

Execution matters. If your MVP is too buggy, slow, or confusing, you didn’t test your idea; you tested your team’s ability to ship under pressure. Some target audiences tolerate paper cuts better than others. If your MVP’s quality isn’t high enough to give you a real signal from your target audience, improve it.

You don’t need a polished product, but it does need to be good enough to learn from.

TL;DR: Learning beats winning

Most MVPs don’t get it right the first time. That’s not the problem. The problem is when you don’t know why it didn’t work. That’s where teams get stuck.

If you can isolate the “why” and validate your riskiest assumptions, then your MVP did its job.

Remember, success isn’t 5,000 active users — success is knowing what to do next.

Featured image source: IconScout

The post Your MVP didn’t fail; you didn’t set it up to teach you anything appeared first on LogRocket Blog.